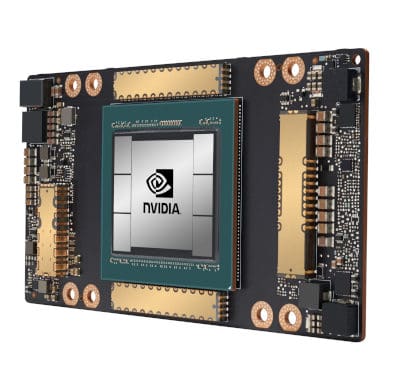

NVIDIA TESLA P100

- GPU Memory: 16 GB CoWoS HBM2

- CUDA Cores: 3584

- Single-Precision Performance: 9.3 TeraFLOPS

- System Interface: x16 PCIe Gen3

About

NVIDIA® Tesla® P100 GPU accelerators are the most advanced ever built for the data center. They tap into the new NVIDIA Pascal™ GPU architecture to deliver the world’s fastest compute node with higher performance than hundreds of slower commodity nodes. Higher performance with fewer, lightning-fast nodes enables data centers to dramatically increase throughput while also saving money.

With over 400 HPC applications accelerated, including 9 of the top 10, as well as all deep learning frameworks, every HPC customer can now deploy accelerators in their data centers.

Specifications

- GPU Architecture: NVIDIA Pascal

- CUDA Parallel Processing Cores: 3584

- Double-Precision Performance: 5.3 TeraFLOPS

- Single-Precision Performance: 10.6 TeraFLOPS

- Half-Precision Performance: 21.2 TeraFLOPS

- GPU Memory: 16 GB CoWoS HBM2

- Memory Bandwidth: 720 GB/s

- Interconnect: NVIDIA NVLink

- Max Power Consumption: 300 W

- ECC: Native support with no capacity or performance overhead

- Thermal Solution: Passive

- Form Factor: SXM2

- Compute APIs: NVIDIA CUDA, DirectCompute, OpenCL™, OpenACC
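The peak-performance figures in the specifications above are related by simple arithmetic, which the following Python sketch illustrates. It is only a rough check, not a vendor formula: the boost clock of about 1.48 GHz is an assumption that does not appear on this page, as is the rule of two floating-point operations (one fused multiply-add) per CUDA core per cycle. The function names are hypothetical and chosen for illustration.

```python
# Figures copied from the specifications listed above.
CUDA_CORES = 3584
PEAK_TFLOPS = {"double": 5.3, "single": 10.6, "half": 21.2}

# Assumption: boost clock in GHz. This value is NOT stated on this page.
ASSUMED_BOOST_CLOCK_GHZ = 1.48


def peak_single_precision_tflops(cores, clock_ghz):
    """Estimate peak FP32 throughput in TeraFLOPS.

    Assumes each core retires one fused multiply-add (2 FLOPs) per cycle.
    """
    gflops = cores * 2 * clock_ghz
    return gflops / 1000.0


def precision_ratios(peaks):
    """Express each listed peak rate relative to the double-precision rate."""
    base = peaks["double"]
    return {name: round(value / base, 2) for name, value in peaks.items()}


estimate = peak_single_precision_tflops(CUDA_CORES, ASSUMED_BOOST_CLOCK_GHZ)
ratios = precision_ratios(PEAK_TFLOPS)

print(f"Estimated single-precision peak: {estimate:.1f} TeraFLOPS")
print(f"Throughput relative to double precision: {ratios}")
```

Under these assumptions the estimate lands close to the listed single-precision figure, and the listed rates double at each step from double to single to half precision. Sustained application performance is typically lower than these theoretical peaks.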

You May Also Like

Related products

NVIDIA TESLA P40

SKU: N/A

- GPU Memory: 24 GB

- CUDA Cores: 3840

- Single-Precision Performance: 12 TeraFLOPS

- System Interface: x16 PCIe Gen3

NVIDIA RTX A6000

SKU: 900-5G133-2500-000

- GPU Memory: 48 GB GDDR6 with error-correcting code (ECC)

- CUDA Cores: 10,752

- PCI Express Gen 4

NVIDIA A100 TENSOR CORE GPU

SKU: N/A

- GPU Memory: 40 GB

- Peak FP16 Tensor Core: 312 TF

- System Interface: 4/8 SXM on NVIDIA HGX A100
