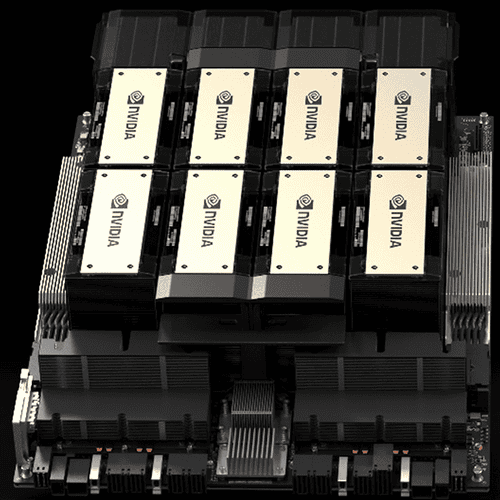

NVIDIA HGX H200

- 141GB of HBM3e GPU memory

- 4.8TB/s of memory bandwidth

- 4 petaFLOPS of FP8 performance

- 2X LLM inference performance

- 110X HPC performance

About

The GPU for Generative AI and HPC

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.
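To make the memory claim concrete, the following is a minimal back-of-the-envelope sketch rather than a benchmark. It uses only the 141GB and 4.8TB/s figures listed on this page. The 70-billion-parameter model and the assumption that every generated token reads all weights from HBM exactly once are illustrative assumptions, not vendor figures; the result is a rough upper bound that ignores the KV cache, activations, and batching.

```python
# Figures taken from the spec list on this page.
H200_MEMORY_GB = 141.0         # HBM3e capacity
H200_BANDWIDTH_GBPS = 4800.0   # 4.8 TB/s expressed in GB/s


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def fits_in_memory(params_billion: float, bytes_per_param: float,
                   memory_gb: float = H200_MEMORY_GB) -> bool:
    """True if the weights alone fit in GPU memory (KV cache not counted)."""
    return weights_gb(params_billion, bytes_per_param) <= memory_gb


def decode_tokens_per_s_upper_bound(params_billion: float,
                                    bytes_per_param: float,
                                    bandwidth_gbps: float = H200_BANDWIDTH_GBPS) -> float:
    """Bandwidth-bound ceiling for single-sequence decoding.

    Assumption: each generated token streams every weight once from HBM.
    """
    return bandwidth_gbps / weights_gb(params_billion, bytes_per_param)


if __name__ == "__main__":
    # Hypothetical 70B-parameter model at 1 byte (FP8) and 2 bytes (FP16) per weight.
    for label, nbytes in (("FP8", 1.0), ("FP16", 2.0)):
        size = weights_gb(70, nbytes)
        bound = decode_tokens_per_s_upper_bound(70, nbytes)
        print(f"{label}: {size:.0f} GB, fits={fits_in_memory(70, nbytes)}, "
              f"<= {bound:.1f} tokens/s")
```

Under these assumptions the FP16 weights only just fit (140GB of 141GB), which leaves almost no room for a KV cache; the FP8 weights leave roughly half of the memory free.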

| Specification | HGX H200 |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS² |
| BFLOAT16 Tensor Core | 1,979 TFLOPS² |
| FP16 Tensor Core | 1,979 TFLOPS² |
| FP8 Tensor Core | 3,958 TFLOPS² |
| INT8 Tensor Core | 3,958 TFLOPS² |
| GPU memory | 141GB |
| GPU memory bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC; 7 JPEG |
| Confidential Computing | Supported |
| Max thermal design power (TDP) | Up to 700W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each |
| Form factor | SXM |
| Interconnect | NVIDIA NVLink®: 900GB/s; PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs |
| NVIDIA AI Enterprise | Add-on |
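One way to read the compute and bandwidth figures together is the standard roofline model, sketched below as an illustration rather than a vendor method. It uses only the FP64 and FP32 peaks and the 4.8TB/s bandwidth listed above; the Tensor Core rows are left out because their ² footnote is not reproduced on this page. The function names and the sample intensities are hypothetical.

```python
# Peak figures copied from the specification list (non-Tensor-Core rows only).
BANDWIDTH_TBPS = 4.8
PEAK_TFLOPS = {"FP64": 34.0, "FP32": 67.0}


def ridge_point(peak_tflops: float, bandwidth_tbps: float = BANDWIDTH_TBPS) -> float:
    """Arithmetic intensity (FLOP per byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tbps


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_tbps: float = BANDWIDTH_TBPS) -> float:
    """Roofline bound: the lower of the compute peak and the bandwidth limit."""
    return min(peak_tflops, intensity * bandwidth_tbps)


if __name__ == "__main__":
    for name, peak in PEAK_TFLOPS.items():
        print(f"{name}: ridge point at {ridge_point(peak):.2f} FLOP/byte")
    # A hypothetical kernel doing 2 FLOP per byte moved is bandwidth-bound in FP64.
    print(f"{attainable_tflops(2.0, PEAK_TFLOPS['FP64']):.1f} TFLOPS attainable")
```

Kernels whose arithmetic intensity falls below the ridge point are limited by memory bandwidth rather than by the listed TFLOPS, which is why the bandwidth figure matters for many HPC workloads.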

You May Also Like

Related products

-

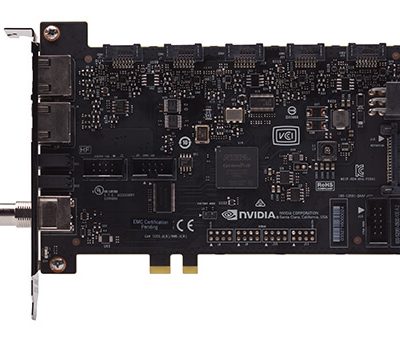

Quadro Sync II

SKU: 900-52061-0000-000More InformationEnabling up to 32 synchronized displays

Powering up to 32 4K displays for entertainment outlets, sporting events.Projector Overlay Support

Multiple projectors can be used to review design concepts or changes at scale.Stereoscopic Display Support

Making a stereoscopic 3D display wall for a research lab while utilizing up to 32 displays with just one system -

NVIDIA QUADRO RTX8000 PASSIVE

SKU: 900-2G150-0050-000More Information- GPU Memory: 48 GB GDDR6 with ECC

- CUDA Cores: 4608

- NVIDIA Tensor Cores: 576

- NVIDIA RT Cores: 72

-

NVIDIA JETSON™ TX2

SKU: N/AMore Information- GPU Memory: 8GB 128-bit LPDDR4 Memory

- GPU: 256-core NVIDIA Pascal™ GPU architecture with 256 NVIDIA CUDA cores

- CPU: Dual-Core NVIDIA Denver 2 64-Bit CPU, Quad-Core ARM® Cortex®-A57 MPCore
