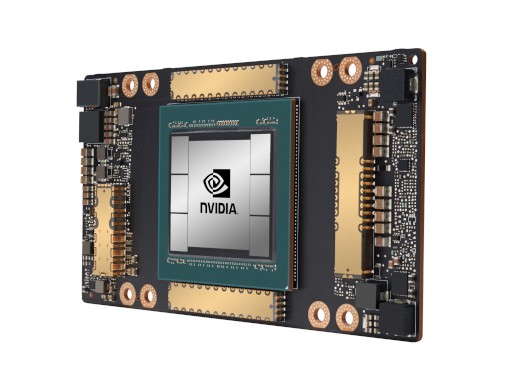

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC™. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale.

NVIDIA A100 TENSOR CORE GPU

- GPU Memory: 40 GB

- Peak FP16 Tensor Core: 312 TF

- System Interface: 4/8 SXM on NVIDIA HGX A100

About

The Most Powerful End-to-End AI and HPC Data Center Platform

Specifications

- Peak FP64: 9.7 TF

- Peak FP64 Tensor Core: 19.5 TF

- Peak FP32: 19.5 TF

- Peak FP32 Tensor Core: 156 TF | 312 TF*

- Peak BFLOAT16 Tensor Core: 312 TF | 624 TF*

- Peak FP16 Tensor Core: 312 TF | 624 TF*

- Peak INT8 Tensor Core: 624 TOPS | 1,248 TOPS*

- Peak INT4 Tensor Core: 1,248 TOPS | 2,496 TOPS*

- GPU Memory: 40 GB

- GPU Memory Bandwidth: 1,555 GB/s

- Interconnect: NVIDIA NVLink 600 GB/s; PCIe Gen4 64 GB/s

- Multi-instance GPUs: Various instance sizes with up to 7 MIGs @ 5 GB

- Form Factor: 4/8 SXM on NVIDIA HGX™ A100

- Max TDP Power: 400 W
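
The peak-throughput and memory-bandwidth figures listed above can be combined into a rough estimate of when a workload is limited by memory rather than compute. The sketch below applies the roofline model, which is not part of this listing and is used here only as an illustration; the function names `ridge_point` and `attainable_tflops` are hypothetical, and the results are theoretical upper bounds derived from the quoted peak numbers, not measured performance.

```python
# Figures copied from the specification list above (dense, non-asterisked values).
PEAK_TFLOPS = {
    "FP64": 9.7,
    "FP64 Tensor Core": 19.5,
    "FP32": 19.5,
    "FP16 Tensor Core": 312.0,
}
MEMORY_BANDWIDTH_GBPS = 1555.0  # GPU memory bandwidth in GB/s


def ridge_point(peak_tflops: float, bandwidth_gbps: float) -> float:
    """Arithmetic intensity (FLOPs per byte) above which a kernel is
    compute-bound rather than memory-bound in the roofline model."""
    return (peak_tflops * 1e12) / (bandwidth_gbps * 1e9)


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_gbps: float) -> float:
    """Roofline upper bound on throughput (TFLOPS) for a kernel that
    performs `intensity` FLOPs per byte moved from GPU memory."""
    memory_bound_tflops = intensity * bandwidth_gbps * 1e9 / 1e12
    return min(peak_tflops, memory_bound_tflops)


if __name__ == "__main__":
    for name, peak in PEAK_TFLOPS.items():
        rp = ridge_point(peak, MEMORY_BANDWIDTH_GBPS)
        print(f"{name}: ridge point at about {rp:.1f} FLOPs/byte")
```

Under these assumptions, a kernel whose arithmetic intensity falls below the ridge point for its precision is bounded by the 1,555 GB/s memory bandwidth rather than by the quoted peak throughput.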

You May Also Like

Related products

NVIDIA H100

SKU: 900-21010-0000-000

Take an order-of-magnitude leap in accelerated computing. The NVIDIA H100 Tensor Core GPU delivers unprecedented performance, scalability, and security for every workload. With NVIDIA® NVLink® Switch System, up to 256 H100 GPUs can be connected to accelerate exascale workloads, while the dedicated Transformer Engine supports trillion-parameter language models. H100 uses breakthrough innovations in the NVIDIA Hopper™ architecture to deliver industry-leading ...

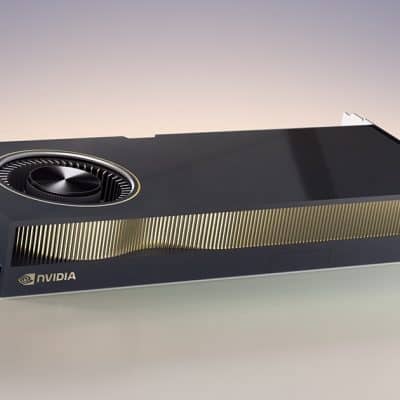

NVIDIA RTX 6000 Ada Generation

SKU: 900-5G133-2550-000

- NVIDIA Ada Lovelace Architecture

- DP 1.4 (4)

- PCI Express 4.0 x16

NVIDIA A100 PCIE GPU

SKU: 900-21001-0000-000

- GPU Memory: 40 GB

- Peak FP16 Tensor Core: 312 TF

- System Interface: PCIe
