About

CUDA is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on its own GPUs (graphics processing units). CUDA lets developers speed up compute-intensive applications by running the parallelizable part of the computation on the GPU.

This post is a super simple introduction to CUDA, the popular parallel computing platform and programming model from NVIDIA. I wrote a previous “Easy Introduction” to CUDA in 2013 that has been very popular over the years. But CUDA programming has gotten easier, and GPUs have gotten much faster, so it’s time for an updated (and even easier) introduction.

CUDA C++ is just one of the ways you can create massively parallel applications with CUDA. It lets you use the C++ programming language to develop high-performance algorithms accelerated by thousands of parallel threads running on GPUs. Many developers have accelerated their computation- and bandwidth-hungry applications this way, including the libraries and frameworks that underpin the ongoing revolution in artificial intelligence known as deep learning.
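To make "thousands of parallel threads" concrete, here is a minimal CUDA C++ sketch that adds two arrays of about one million floats on the GPU. It assumes a CUDA-capable GPU, the `nvcc` compiler, and Unified Memory (`cudaMallocManaged`); the helper name `run_add` is hypothetical, and error handling is kept to a minimum.

```cuda
#include <cmath>
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: each thread starts at its global index and then jumps ahead by the
// total number of threads in the grid (a "grid-stride loop"), so the loop
// covers all n elements regardless of the launch configuration.
__global__ void add(int n, const float *x, float *y) {
  int index = blockIdx.x * blockDim.x + threadIdx.x;
  int stride = blockDim.x * gridDim.x;
  for (int i = index; i < n; i += stride)
    y[i] = x[i] + y[i];
}

// Hypothetical host helper: fills x with 1.0f and y with 2.0f, computes
// y[i] = x[i] + y[i] on the GPU, and returns the maximum absolute deviation
// from the expected 3.0f (or -1.0f if allocation fails).
float run_add(int n) {
  float *x = nullptr, *y = nullptr;

  // Unified Memory: the same pointers are valid in host and device code.
  if (cudaMallocManaged(&x, n * sizeof(float)) != cudaSuccess) return -1.0f;
  if (cudaMallocManaged(&y, n * sizeof(float)) != cudaSuccess) {
    cudaFree(x);
    return -1.0f;
  }

  for (int i = 0; i < n; i++) {
    x[i] = 1.0f;
    y[i] = 2.0f;
  }

  // Launch enough 256-thread blocks to give every element its own thread.
  int blockSize = 256;
  int numBlocks = (n + blockSize - 1) / blockSize;
  add<<<numBlocks, blockSize>>>(n, x, y);

  // Kernel launches are asynchronous: wait for the GPU before reading y.
  cudaDeviceSynchronize();

  float maxError = 0.0f;
  for (int i = 0; i < n; i++)
    maxError = std::fmax(maxError, std::fabs(y[i] - 3.0f));

  cudaFree(x);
  cudaFree(y);
  return maxError;
}

int main() {
  int n = 1 << 20;  // 1,048,576 elements
  float err = run_add(n);
  printf("Max error: %f\n", err);
  return err == 0.0f ? 0 : 1;
}
```

Compiled with something like `nvcc add.cu -o add` (the file name is illustrative), a max error of 0 means every element came out as 3.0. The grid-stride loop is a design choice: the same kernel stays correct whether it is launched with one block or with one thread per element.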

Related products

Theano

Theano is a Python library that lets you define, optimize, and evaluate mathematical expressions, especially ones with multi-dimensional arrays (numpy.ndarray). Using Theano, it is possible to attain speeds rivaling hand-crafted C implementations for problems involving large amounts of data. It can also surpass C on a CPU by many orders of magnitude by taking ...

NVIDIA Tesla P100

- GPU Memory: 16 GB CoWoS HBM2

- CUDA Cores: 3584

- Single-Precision Performance: 9.3 TeraFLOPS

- System Interface: x16 PCIe Gen3

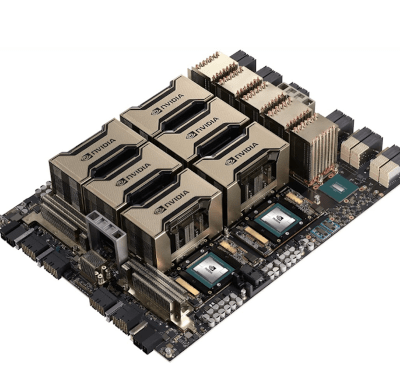

NVIDIA HGX A100 (8-GPU)

- 8x NVIDIA A100 GPUs with 320 GB total GPU memory

- 6x NVIDIA NVSwitches

- 320 GB memory

- 4.8 TB/s total aggregate bandwidth
