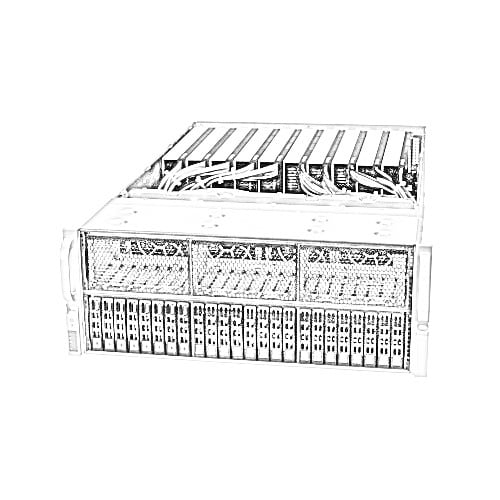

10 GPU 2 XEON DEEP LEARNING AI SERVER

- GPU: Up to 10 NVIDIA H100, A100, L40, A40, RTX6000, or other 2-slot GPUs

- CPU: Dual 4th Generation Intel Xeon Scalable processors

- System Memory: Up to 8 TB (32 DIMM slots)

- Storage: NVMe

About

The XINMATRIX® 10 GPU Deep Learning System is a scalable, configurable 4U rackmount system that supports up to 10 double-width NVIDIA GPUs and dual latest-generation Intel Xeon Scalable processors. The number of GPUs and CPUs, the system memory, and the storage are all configurable to your requirements.

SYMMATRIX provides a full range of AI GPU systems, from 1 to 10 GPUs, customized to your requirements.

Specification

GPU

Options:

Up to 10 NVIDIA H100, A100, L40, A40, RTX6000, or other 2-slot GPUs

CPU

4th Generation Intel® Xeon® Scalable Processors

System Memory

32 DIMM slots

Up to 8 TB

Storage

NVMe

Network

2× 10GbE ports + 1× IPMI port

Optional: dual-port InfiniBand or other high-speed PCIe card

System Weight

Gross weight: ~45 kg

System Dimension

4U Rackmount

H 178 mm, W 437 mm, D 740 mm

Maximum Power Requirements

2+2 redundant 2700 W Titanium-level power supplies

Operating Temperature Range

10 °C to 35 °C (50 °F to 95 °F)

Support & Warranty

Three or five years of on-site parts and service, next business day (NBD), 8×5

Related products

2 GPU 2 EPYC DEEP LEARNING AI SERVER

SKU: SMXB8252T75

- GPU: 2 NVIDIA RTX A6000, A40, RTX 8000, T4

- CPU: 128 cores (2 AMD EPYC Rome)

- PCIe Gen 4.0 support

- System Memory: 4 TB (32 DIMM)

- Storage: 26 × 2.5" SATA/NVMe U.2 SSD hot-swap bays, 2 × NVMe M.2 SSD

10 GPU 2 XEON DEEP LEARNING AI SERVER

SKU: SMXB7119FT83

- GPU: 10 NVIDIA A100, A40, A30, V100, RTX A6000, RTX A5000

- NVLink: 4 NVLink

- CPU: 80 cores (2 Intel Xeon Scalable), single/dual root

- System Memory: 8 TB (32 DIMM)

- Storage: 12 × 3.5" SATA SSD/HDD or NVMe PCIe U.2 bays

8 GPU 2 EPYC DEEP LEARNING AI SERVER

SKU: SMX-GS4845

- GPU: 8 NVIDIA A100, V100, RTX A6000, RTX 8000, A40

- NVLink: 4 NVLink

- CPU: 128 cores (2 AMD EPYC Rome)

- PCIe Gen 4.0 support

- System Memory: 4 TB (32 DIMM)

- Type A: 12 × 3.5" SATA/NVMe U.2 hot-swap bays

- Type B: 24 × 2.5" SATA/SAS/NVMe U.2 hot-swap bays