NVIDIA GPU AI Servers

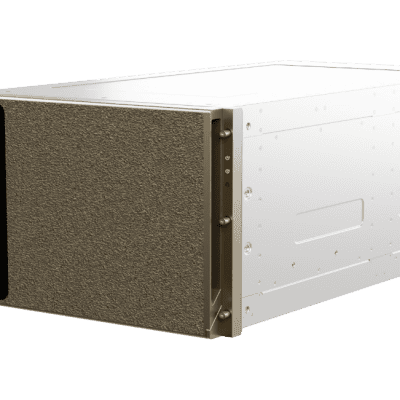

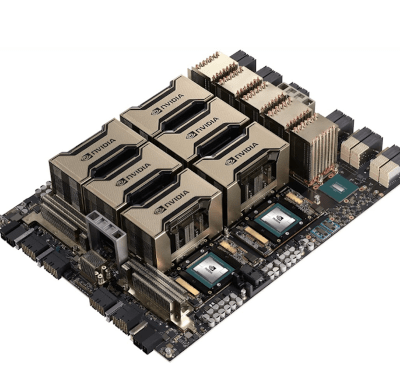

NVIDIA DGX B200

SKU: 900-2G133-0010-000-1

- 8x NVIDIA Blackwell GPUs

- 1,440GB total GPU memory

- 72 petaFLOPS training and 144 petaFLOPS inference

- 2 Intel® Xeon® Platinum 8570 Processors

NVIDIA DGX Rubin NVL8

SKU: N/A

- 8x NVIDIA Rubin GPUs

- 2x Intel® Xeon® 6776P processors

- 2.3 TB GPU Memory

- NVFP4 Inference: 400 PF

- NVFP4 Training: 280 PF

- FP8/FP6 Training: 140 PF

- NVLink 28.8 TB/s total bandwidth

-

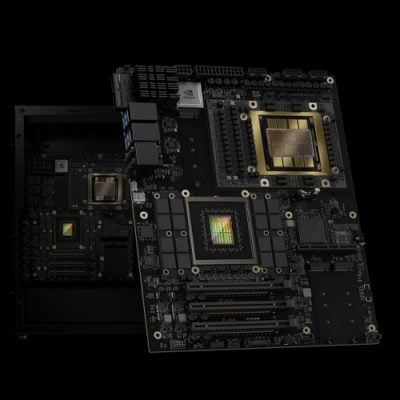

NVIDIA GB300 NVL72

SKU: N/AMore Information- AI Reasoning Inference

- 288 GB of HBM3e

- NVIDIA Blackwell Architecture

- NVIDIA ConnectX-8 SuperNIC

- NVIDIA Grace CPU

- Fifth-Generation NVIDIA NVLink

NVIDIA DGX B300

SKU: N/A

- Built with NVIDIA Blackwell Ultra GPUs

- 2.3TB of GPU memory space

- 72 petaFLOPS of training performance

- 144 petaFLOPS of inference performance

- NVIDIA networking

- Intel® Xeon® 6776P Processors

- Foundation of NVIDIA DGX BasePOD™ and NVIDIA DGX SuperPOD™

- Leverages NVIDIA AI Enterprise and NVIDIA Mission Control software

-

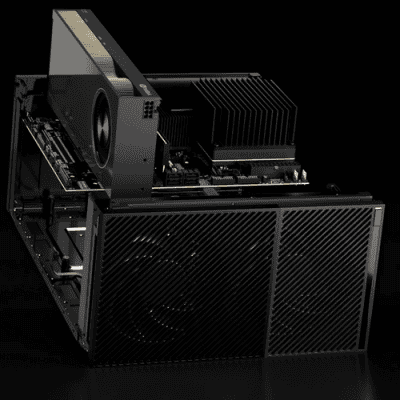

NVIDIA DGX GB300

SKU: N/AMore Information- Built on NVIDIA GB300 Grace Blackwell Ultra Superchips

- Scalable up to tens of thousands of GB300 Superchips with NVIDIA DGX SuperPO

- 72 NVIDIA Blackwell Ultra GPUs connected as one with NVIDIA® NVLink®

- Efficient, 100% liquid-cooled, rack- scale design

- NVIDIA networking

- Leverages NVIDIA AI Enterprise and NVIDIA Mission Control software

NVIDIA DGX H200

SKU: 900-2G133-0010-000-1-1

- 8x NVIDIA H200 GPUs with 1,128 GB of total GPU memory

- 4x NVIDIA NVSwitches™

- 10x NVIDIA ConnectX®-7 400Gb/s Network Interface

- Dual Intel Xeon Platinum 8480C processors

- 30TB NVMe SSD

-

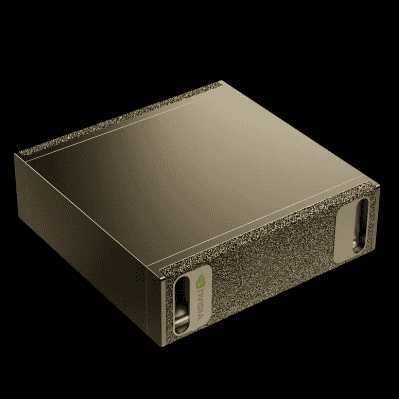

NVIDIA DGX Spark GB10

SKU: N/AMore Information- Built on NVIDIA GB10 Grace Blackwell Superchip

- NVIDIA Blackwell GPU with fifth-generation Tensor Core technology

- NVIDIA Grace CPU with 20- core high-performance Arm architecture

- Up to 1 petaFLOP of AI performance using FP4

- 128 GB of coherent, unified system memory

- Support for up to 200 billion parameter model

- NVIDIA ConnectX™ networking to link two systems to work with models up to 405 billion parameters

- Up to 4 TB of NVMe storage

- Compact desktop form factor

NVIDIA DGX Station GB300

SKU: N/A

- NVIDIA Grace Blackwell Ultra Desktop Superchip

- Fifth-Generation Tensor Cores

- NVIDIA ConnectX-8 SuperNIC

- NVIDIA DGX OS

- NVIDIA NVLink-C2C Interconnect

- Large Coherent Memory for AI

NVIDIA IGX Orin

SKU: N/A

- Enterprise Support

- Unmatched Performance

- Proactive AI Safety

- Robust, Comprehensive Security

NVIDIA DGX GH200

SKU: DGX GH200-1

- 32x NVIDIA Grace Hopper Superchips, interconnected with NVIDIA NVLink

- Massive, shared GPU memory space of 19.5TB

- 900 gigabytes per second (GB/s) GPU-to-GPU bandwidth

- 128 petaFLOPS of FP8 AI performance

NVIDIA DGX H100

SKU: DGX H100

- 8x NVIDIA H100 GPUs with 640 GB of total GPU memory

- 4x NVIDIA NVSwitches

- 8x NVIDIA ConnectX-7 and 2x NVIDIA BlueField DPU 400 Gb/s network interfaces

- Dual x86 CPUs and 2 TB of system memory

- 30 TB NVMe SSD

NVIDIA DGX A100

SKU: DGXA-2530A+P2CMI00

- 8X NVIDIA A100 GPUS WITH 320 GB TOTAL GPU MEMORY

- 6X NVIDIA NVSWITCHES

- 9X MELLANOX CONNECTX-6 200Gb/S NETWORK INTERFACE

- DUAL 64-CORE AMD CPUs AND 1 TB SYSTEM MEMORY

- 15 TB GEN4 NVME SSD

NVIDIA DGX STATION A100 320GB/160GB

SKU: DGXS-2080C+P2CMI00

- 2.5 petaFLOPS of performance

- World-class AI platform, with no complicated installation or IT help needed

- Server-grade, plug-and-go, and doesn’t require data center power and cooling

- 4 fully interconnected NVIDIA A100 Tensor Core GPUs and up to 320 gigabytes (GB) of GPU memory

NVIDIA HGX A100 (8-GPU)

SKU: N/A

- 8X NVIDIA A100 GPUS WITH 320 GB TOTAL GPU MEMORY

- 6X NVIDIA NVSWITCHES

- 320 GB MEMORY

- 4.8 TB/s TOTAL AGGREGATE BANDWIDTH

1 NVIDIA GH200 GRACE HOPPER

SKU: GPU ARS-111GL-SHR

- NVIDIA GH200 Grace Hopper™ Superchip, up to 72 cores 144GB

- Up to 480GB ECC LPDDR5X embedded on the NVIDIA Superchip

- Up to 3 PCIe 5.0 x16 FHFL slots

- Up to 8 front hot-swap E1.S NVMe drive bays

- 1U Rackmount chassis

10 GPU, H200, RTX Pro 6000, EPYC

SKU: 4U10G-TURIN2/RF

- 4U Rackmount with 10X H200 NVL, RTX PRO 6000

- Dual Socket SP5 (LGA 6096), supports AMD EPYC™ 9005/9004 (with AMD 3D V-Cache™ Technology) and 97x4 series processors

- 12+12 DIMM slots (1DPC), supports DDR5 RDIMM, RDIMM-3DS

- 16 hot-swap 2.5" NVMe (PCIe5.0 x4) drive bays or 24 Hot-swap 2.5" SATA/SAS* drive bays *Additional RAID/HBA card required

10 GPU, H200, RTX Pro 6000, Xeon

SKU: 4U10G-GNR2/RF

- 4U Rackmount with 10X H200 NVL 141GB, RTX PRO 6000 96GB

- Dual Socket E2 (LGA 4710), supports Intel® Xeon® 6700P-series, 6500P-series, and 6700E-series processors

- 16+16 DIMM slots (2DPC); supports DDR5 RDIMM, MRDIMM

- 18 hot-swap 2.5" NVMe (PCIe5.0 x4) drive bays

2 H200 DEEP LEARNING AI SERVER

SKU: SMX-R4051-2

- GPU: 2 NVIDIA H200 NVL 141GB 600W or 2 NVIDIA RTX PRO 6000 96GB Server 600W

- CPU: Single AMD EPYC 9965 192 Cores 384T 500W Processor

- System Memory: 3TB (12 DIMM)

- Storage: NVMe Gen 5

2 NVIDIA GH200 GRACE HOPPER

SKU: GPU ARS-221GL-NHIR

- High density 2U 1-node GPU system with two integrated NVIDIA GH200 Superchips

- NVIDIA® NVLink® high-speed interconnect between both GH200 Superchips

- Up to 1248GB of coherent memory (system as a whole), including 960GB LPDDR5X and 288GB of HBM3e for LLM applications

- 4x PCIe 5.0 x16 supporting one NVIDIA BlueField-3 and two ConnectX-7 cards
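
The coherent-memory figure above is the sum of the two memory pools across both superchips. A minimal sketch of that arithmetic follows; the even per-superchip split (480 GB LPDDR5X and 144 GB HBM3e each) is an assumption inferred from the listed totals, and the function name is hypothetical.

```python
# Per-superchip capacities in GB. ASSUMPTION: the listed system totals
# (960 GB LPDDR5X, 288 GB HBM3e) split evenly across the two GH200 superchips.
LPDDR5X_GB_PER_SUPERCHIP = 480
HBM3E_GB_PER_SUPERCHIP = 144
SUPERCHIPS = 2


def coherent_memory_gb(superchips: int, lpddr5x_gb: int, hbm3e_gb: int) -> int:
    """Total coherent memory: CPU-attached plus GPU-attached memory on every superchip."""
    return superchips * (lpddr5x_gb + hbm3e_gb)


total_gb = coherent_memory_gb(
    SUPERCHIPS, LPDDR5X_GB_PER_SUPERCHIP, HBM3E_GB_PER_SUPERCHIP
)
print(f"Coherent memory: {total_gb} GB")
```

With the listed figures this gives 1248 GB, matching the "system as a whole" number in the listing.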

8 GPU H200, RTX Pro 6000, Xeon

SKU: 4UXGM-GNR2

- 4U NVIDIA MGX™ with 8x H200 or RTX Pro 6000 GPU

- Dual Socket E2 (LGA 4710), supports Intel® Xeon® 6700P-series, 6500P-series, and 6700E-series processors

- 16+16 DIMM slots (2DPC); supports DDR5 RDIMM, MRDIMM

- 16 Hot-swap E1.S (PCIe5.0 x4) drive bays

- 3+1, 80-PLUS Titanium, 3200W CRPS

- 2 RJ45 (1GbE) by Intel® i350

- Remote Management (IPMI)

8 GPU, H200, RTX Pro 6000, EPYC

SKU: 4UXGM-TURIN2

- 4U NVIDIA MGX™ with 8 NVIDIA H200, RTX PRO 6000 GPU

- Dual Socket SP5 (LGA 6096), supports AMD EPYC™ 9005/9004 (with AMD 3D V-Cache™ Technology) and 97x4 series processors

- 12+12 DIMM slots (1DPC), supports DDR5 RDIMM, RDIMM-3DS

- 16 Hot-swap E1.S (PCIe5.0 x4) drive bays

2 GPU 2 EPYC DEEP LEARNING AI SERVER

SKU: SMXB8252T75

- GPU: 2 NVIDIA RTX A6000, A40, RTX 8000, T4

- CPU: 128 CORES (2 AMD EPYC ROME)

- PCIe Gen 4.0 support

- System Memory: 4 TB (32 DIMM)

- 26 2.5" SATA/NVMe U.2 SSD Hotswap bays, 2 NVMe M.2 SSD

4 GPU 1 EPYC DEEP LEARNING AI SERVER

SKU: SMXB8021G88

- GPU: 4 NVIDIA A100, V100, RTX A6000, A40, RTX 8000, T4

- CPU: 64 CORES (1 AMD EPYC ROME)

- PCIe Gen 4.0 support

- System Memory: 2 TB (16 DIMM)

- 2 2.5" SATA SSD Hotswap bays, 2 NVMe M.2 SSD

- 1U Rackmount

4 GPU 1 XEON DEEP LEARNING AI SERVER

SKU: SMXB5631G88

- GPU: 4 NVIDIA A100, V100, RTX A6000, A40, RTX 8000, T4

- CPU: 28 CORES (1 Intel Xeon Scalable)

- System Memory: 1.5 TB (12 DIMM)

- STORAGE: 2 2.5" SSD, 2 NVMe M.2 SSD

- 1U Rackmount

4 RTX Pro 6000 96GB AI WORKSTATION

SKU: SMX-DX4

- GPU: 4 NVIDIA RTX Pro 6000 96GB Blackwell

- Quiet Operation for Office/Lab

SMX H200 AI WORKSTATION

SKU: SMX STATION A100

- GPUs: 2x NVIDIA H200 141 GB GPUs

- GPU Memory: 282 GB total

- NVLink: NVLink Included

- CPU: Single AMD EPYC 9005 Turin 160Cores/320T

- System Memory: 8 x 256GB = 2TB

- Networking: Dual-port 10GbE, BMC management port

- Storage: NVMe + SATA

- Software: NVIDIA NGC Package
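
The memory totals in this configuration follow from simple per-device multiplication. A small sketch of the check, assuming the listing uses binary terabytes (1 TB = 1024 GB) for system memory; the helper name is hypothetical.

```python
def total_capacity_gb(count: int, size_gb: int) -> int:
    """Capacity of `count` identical devices of `size_gb` each."""
    return count * size_gb


# GPU memory: 2 x H200 at 141 GB each.
gpu_memory_gb = total_capacity_gb(2, 141)

# System memory: 8 DIMMs at 256 GB each, converted to TB.
# ASSUMPTION: 1 TB = 1024 GB, which is how 8 x 256 GB reads as exactly 2 TB.
system_memory_gb = total_capacity_gb(8, 256)
system_memory_tb = system_memory_gb / 1024

print(f"GPU memory: {gpu_memory_gb} GB")
print(f"System memory: {system_memory_gb} GB ({system_memory_tb:.0f} TB)")
```

This reproduces the 282 GB GPU memory and 2 TB system memory figures listed above.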

2 GPU 2 EPYC DEEP LEARNING AI WORKSTATION

SKU: SMX-T0046

- GPU: 2 NVIDIA RTX Pro 6000 96GB Max-Q

- CPU: 2 AMD EPYC 9005, 2x 160 Cores

- System Memory: 3 TB (24 DIMM)

- NVMe SSD

4 GPU 1 EPYC AI WORKSTATION

SKU: SMX-SE4

- GPU: 4 Dual Slot Active

- CPU: AMD EPYC 7003 64 Cores

- System Memory: 1 TB (8 DIMM)

- NVMe SSD

4 GPU 1 THREADRIPPER DEEP LEARNING AI WORKSTATION

SKU: SMX-ST4

- GPU: 4 RTX 6000 Ada

- CPU: AMD Threadripper Pro 64 Cores

- RAM: 1TB 8x 128GB

- NVMe SSD

4 GPU 1 XEON DEEP LEARNING AI WORKSTATION

SKU: SMX-SX4

- GPU: 4 ACTIVE GPU

- CPU: Intel Xeon W 24 Cores

- System Memory: 1 TB (8 DIMM)

- NVMe SSD
